Back in March I wrote about a few security issues with Google Docs while keeping some details private.

Google Security and the Google Docs product management team engaged me immediately after the issues became public, and kept me well informed of their findings through several days of productive exchange of ideas. I’m used to getting the silent treatment when reporting security issues, so I’d like to credit Google for keeping the lines of communication open.

I had been “on the road” since then and decided to take time off from blogging. Now that I’m back home, I’d like to close these issues before writing about a few other (non-Google) security & privacy concerns I have in mind.

So without further delay let’s revisit the three Docs issues based on my emails with Google back in late March and early April. I understand that Google have made changes to remediate part or all of these issues, according to their own risk determination.

1. No protection for embedded images

This issue was about the lack of protection (authentication) for images embedded in a document, and an image’s continued existence on Google’s servers after its containing document has been deleted. The lack of authentication means that the image URL could be accessed by third parties without the document owner’s consent.

Google correctly noted that the image URL would have been known only to those with previous access to the image, and someone with such access could have saved the image anyway, and perhaps disclosed the saved image to unauthorized persons.

However, from a privacy perspective, there is a crucial difference between a “saved” image being disclosed, and one being served directly by Google Docs: evidence of ownership.

Let’s examine how a typical Docs image URL is constructed:

docs.google.com/File?id=dtfqs27_1f3vfmkcz_b (an image stored at Google)

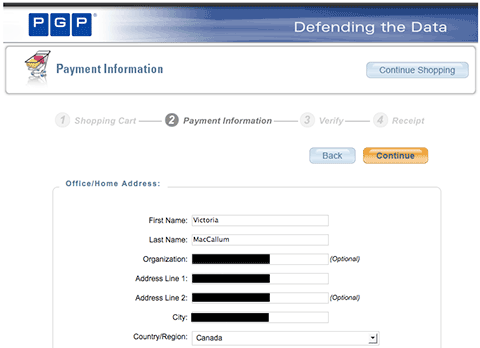

The leading portion of the ID in the URL (“dtfqs27”) seems to uniquely identify the resource owner (in this case, me). Documents and images created by the same account will have this same ID as part of the URL.

Embedding “personally linkable” IDs in URLs is poor practice and has wide-ranging privacy implications on its own (more on this later). Yet we’re going two steps further here by: 1) associating the ID with a document resource; and 2) making the entire URL publicly accessible. This is a form of Insecure Direct Object Reference, a common security issue which I’ll have more to say about in the coming days.

Contrived scenario:

I share a picture of my company’s ultra-secret new tablet with a potential supplier. An employee of said supplier saves the picture and wants to sell it to AdeInsider.com, a rumor site tracking my inventions. The site accuses the employee of just making it all up in Photoshop. So the employee shares the link instead, e.g.:

docs.google.com/File?id=dtfqs27_4ghppz9dq_b

Since there is no authentication, AdeInsider.com can now widely publish that link, and point out to their readers that the image on my blog has the same unique identifier, thus positively determining ownership. Instant privacy breach. (Instead of a secret gadget, imagine compromising pictures, etc.) My only recourse is to get Google support to remove the image, since I can’t immediately do it myself by deleting the containing document. But any action on my part would have been too late, anyway.
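To make the linkage concrete, here is a minimal Python sketch of the comparison the rumor site could do. It assumes that the part of the `id` parameter before the first underscore identifies the account, which is only what the two URLs in this post suggest and not a documented format. The helper name `owner_prefix` is mine.

```python
from urllib.parse import urlparse, parse_qs


def owner_prefix(url: str) -> str:
    """Return the part of the `id` parameter before the first underscore.

    Assumption: that leading segment identifies the account. This is an
    observation from the URLs in this post, not a documented format.
    """
    # The URLs in this post carry no scheme, so add one for urlparse.
    if "://" not in url:
        url = "https://" + url
    query = parse_qs(urlparse(url).query)
    resource_id = query["id"][0]
    return resource_id.split("_", 1)[0]


blog_image = "docs.google.com/File?id=dtfqs27_1f3vfmkcz_b"
leaked_image = "docs.google.com/File?id=dtfqs27_4ghppz9dq_b"

# Matching prefixes tie the leaked image to the account behind the blog image.
same_owner = owner_prefix(blog_image) == owner_prefix(leaked_image)
print(owner_prefix(leaked_image), same_owner)
```

No access to Google Docs is needed for this check. Two public URLs are enough.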

As I noted in a previous post, I can only recommend defense-in-depth. In this case the lack of authentication, which appears benign by itself due to randomness in the URL, might cause a serious privacy breach when combined with another issue (the leak of what is essentially personally identifying information).
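As a rough illustration of what defense-in-depth could look like here, the sketch below combines three layers: an ID with no account-derived segment, an access check on every read, and a delete that removes the image itself. This is a toy in-memory model under my own assumptions. `ImageStore` and its methods are hypothetical names and say nothing about how Google Docs is actually built.

```python
import secrets


class ImageStore:
    """Toy in-memory image store; every name here is hypothetical."""

    def __init__(self) -> None:
        self._records = {}

    def put(self, owner: str, data: bytes, viewers=()) -> str:
        # Random ID that contains no account-derived segment.
        image_id = secrets.token_urlsafe(16)
        self._records[image_id] = {"viewers": {owner, *viewers}, "data": data}
        return image_id

    def get(self, image_id: str, requester: str) -> bytes:
        record = self._records.get(image_id)
        # Same error for "missing" and "not allowed", so the response
        # does not confirm that the image exists.
        if record is None or requester not in record["viewers"]:
            raise PermissionError("not found or not authorized")
        return record["data"]

    def delete(self, image_id: str) -> None:
        # Deleting removes the image itself, not only a reference to it.
        self._records.pop(image_id, None)


store = ImageStore()
image_id = store.put("owner", b"tablet", viewers=["supplier"])
supplier_view = store.get(image_id, "supplier")

try:
    store.get(image_id, "rumor-site")
    outsider_blocked = False
except PermissionError:
    outsider_blocked = True

print(supplier_view, outsider_blocked)
```

The supplier could still leak a saved copy, but a forwarded link no longer serves the image to outsiders, and the ID itself says nothing about who owns it.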

Tangent:

Tagging resources with IDs potentially linked to personal information is unfortunately a widespread practice, with Facebook being a big example. Like images in Google Docs, images uploaded to Facebook are tagged with the user’s ID, are accessible without authentication, and are subject to the same privacy flaw. It’s trivial to map Facebook IDs to real names. From a privacy perspective, ID tagging might in some cases be more problematic than tracking cookies.

2. File revision flashback

I’m not going to add much more on this issue except to note that privacy breaches can occur when designed behavior has non-intuitive implications for regular users. The old Microsoft Fast Save feature comes to mind, as well as a number of accidental disclosures involving PDF. The fact that someone can fiddle with an embedded image’s URL (normally buried in HTML) to get previous revisions is not obvious to your typical Docs user.

Google has added useful entries in their Help files and there are now explicit controls in the diagram tool.

3. I’ll help myself to your Docs, thanks

I reported that in some cases, a person removed from a shared document could add himself back to a document’s shared list without the owner’s permission or knowledge. This issue obviously garnered the most attention and as it turned out, was much more complex than I originally thought.

Google clarified that this behavior is proper when a document has the “invitations may be used by anyone” option enabled. The purpose of this option is to allow forwarding of invitations (e.g., for mailing lists), and it essentially works by making the document public.

After Google’s clarification, I checked through my test documents, and sure enough, this option was enabled on them, explaining the behavior. There was only one problem: I had explicitly disabled this option when creating my test documents, yet somehow these documents became publicly accessible!

After additional analysis at the time, my findings indicated that:

– A race condition existed due to the way the document sharing control GUI was implemented. Most of the time, the Docs sharing control worked fine. However, in some cases the control could fail in three distinct ways: a) the “invitations may be used by anyone” option visibly re-enabled itself after being disabled, immediately prior to the user clicking “submit”; b) the option remained disabled on screen, but was incorrectly submitted as enabled; c) the GUI completely failed and became non-responsive (which is actually fine since that’s fail-secure.)

I was able to record screencasts of each failure type and submitted them for Google’s review.

– Compounding the issue, a different GUI problem could hide the fact that a deleted “sharee” has added himself back to a document.

In Google Docs there are several areas where a document’s sharing status can be seen, including from the main screen’s “folder view”, from the left-nav of the main screen, and from a document’s sharing dialog. When a sharee deleted a document (breaking the share) and then immediately added himself back, the main folder view and left-nav would show that the document was no longer being shared when in fact it still was.

So weaknesses in the Google Docs user interface implementation could cause private document invitations to be wrongly permissioned as public, and furthermore, deleted share participants could add themselves back to documents without the document’s owner noticing.
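I have no visibility into how the sharing control was actually coded, so the following is only a toy Python model of how a race of this general kind can produce failures (a) and (b) described above. It assumes the option starts enabled and that a delayed update arrives after the user’s click. `SharingDialog`, its fields, and the `run` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SharingDialog:
    """Toy model of a sharing dialog; every name here is hypothetical."""

    shown: bool  # checkbox state the user sees
    model: bool  # value that is actually sent on submit

    def user_sets(self, value: bool) -> None:
        # The user's click updates both the screen and the model.
        self.shown = value
        self.model = value

    def late_refresh(self, stale_value: bool, repaint: bool) -> None:
        # A delayed update arrives after the click and overwrites it.
        self.model = stale_value
        if repaint:
            self.shown = stale_value

    def submit(self) -> dict:
        return {"invitations_usable_by_anyone": self.model}


def run(repaint: bool) -> tuple:
    dialog = SharingDialog(shown=True, model=True)  # assumed: enabled at start
    dialog.user_sets(False)                         # user disables the option
    dialog.late_refresh(stale_value=True, repaint=repaint)
    return dialog.shown, dialog.submit()["invitations_usable_by_anyone"]


failure_a = run(repaint=True)   # checkbox visibly re-enables itself
failure_b = run(repaint=False)  # checkbox looks disabled, submits enabled
print(failure_a, failure_b)
```

In both runs the submitted value is enabled even though the user disabled it. Only the first run gives the user any visible hint, which is why failure (b) is the more dangerous of the two.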

What are essentially simple UI flaws (which arguably should have been caught by developers and/or QA) now have security and privacy implications. This “escalation” is an inherent risk with collaborative applications, especially “cloud” applications which have world-shareable features.

I must state that the likelihood of a direct breach due to wrong permissioning is low. However, as Issue #1 demonstrated, even seemingly minor flaws could lead to privacy leaks. Indeed, documents incorrectly permissioned in this way are subject to the same evidence of ownership leak as the images in Issue #1.

From what I could tell, Google quickly implemented changes to fix part if not all of these issues. I have no visibility regarding how many documents were incorrectly marked public. Readers with highly sensitive documents should periodically review their sharing controls.